ELA Teachers’ Latest Challenge: Spotting the Bot

Photo by Gustavo Fring

I remember the first time I received an essay written by ChatGPT. My student, a reluctant writer, wrote in sentence fragments and avoided commas like they were a disease. But his latest essay read like he’d swapped brains with a grad student.

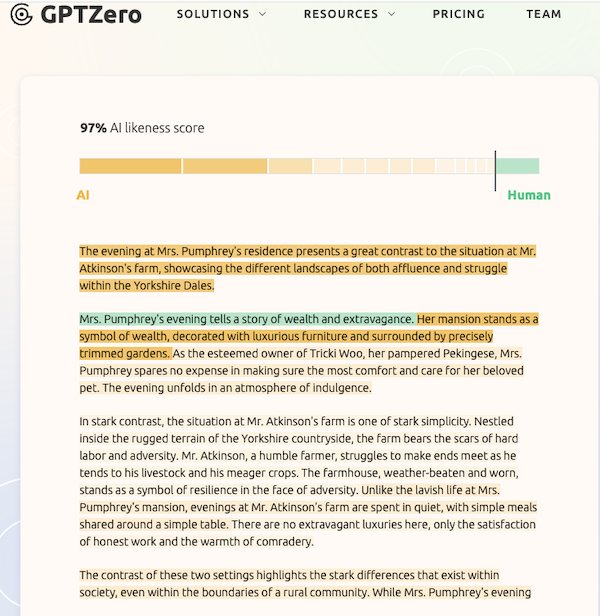

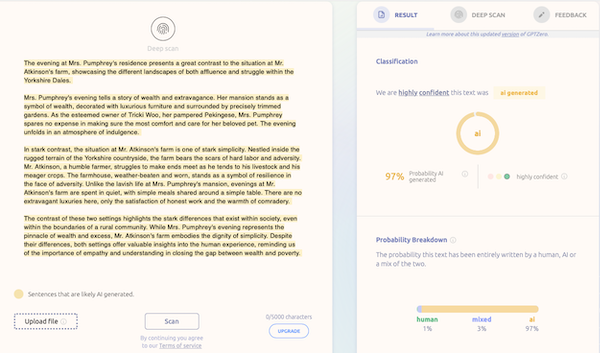

His sentences typically read like this one:

Is that Uncle does not try to encourage or help Herriot but instead finds enjoyment in seeing Herriot struggle and.

But two weeks later, his essay featured sentences like this one:

The evening at Mrs. Pumphrey's residence presents a great contrast to the situation at Mr. Atkinson's farm, showcasing the different landscapes of both affluence and struggle within the Yorkshire Dales.

I knew the likely explanation. The student had used ChatGPT to write his essay.

Yet, like so many other ELA teachers, I faced two problems before I could confront him about using ChatGPT. First, I needed proof that he had used an artificial intelligence (AI) bot to write his essay. Second, the Internet now bristles with tools that promise to generate writing at any grade level and make it sound human. Gulp. Welcome to teaching ELA in the AI Era.

How Does ChatGPT Work?

AI chatbots run on large language models (LLMs), which learn from enormous amounts of text, much of it gathered from the Internet. The best-known chatbot, and arguably the best, is ChatGPT. Chatbots (or simply bots) draw on patterns learned from billions of sentences to predict which word (or punctuation mark) comes next. This ability lets a bot respond to instructions in plain English, answering questions and writing essays.
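To make "predict which word comes next" concrete, here is a deliberately tiny sketch. It is my own illustration, not how ChatGPT is actually built: real chatbots use neural networks with billions of parameters and weigh far more context. This toy "bigram" model simply counts which word most often follows another in a scrap of sample text; the function names `train_bigrams` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the sample text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the word seen most often after `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A made-up sample sentence, just for illustration.
sample = (
    "the vet drove to the farm and the farmer met the vet at the gate "
    "the vet smiled"
)
model = train_bigrams(sample)
print(predict_next(model, "the"))  # "vet" follows "the" three times
```

Chain these predictions together and you get a (very bad) text generator. An LLM works on the same basic principle of predicting the next word, but it does so far more convincingly because it considers much more than a single preceding word.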

Spotting the Bot ≠ Proof

For those of us who have taught for more than a decade, this battle with technology feels like déjà vu all over again. Back in the early 2000s, students used the Internet to cobble together writing assignments from online sources. For years, teachers struggled to come up with definitive evidence of plagiarism. Then Turnitin.com and iThenticate came along. (I recall Turnitin revealing that one group’s semester-long project was 99.8% plagiarized, leaving the title and the phrase “we argue” as practically the only original words in 20 pages.)

Photo by Matheus Bertelli

Now we’re back in a similar position. We know when students’ writing shows consistent signs of progress. We can even spot when students genuinely arrive at a quantum leap in their learning. But AI bots can now write essays that ape student writing, right down to typos, ESL errors, and words and sentences that reflect specific grade levels. Moreover, when confronted, most students will swear they wrote every word, usually backed up by indignant parents.

So how do we prove our students used a bot to write their assignments?

Using Bots to Catch Bot Writing

One way to get proof is to use an AI detector. But when you search for AI detectors, you face a raft of websites, all claiming to detect AI-generated writing.

The good news: virtually all of them offer a free scan of pasted text.

The bad news: the quality of the detection can vary wildly.

AI detectors are only as good as their access to the AI models themselves. Moreover, their accuracy depends on the quality and volume of the human-written and AI-generated texts used to train them.

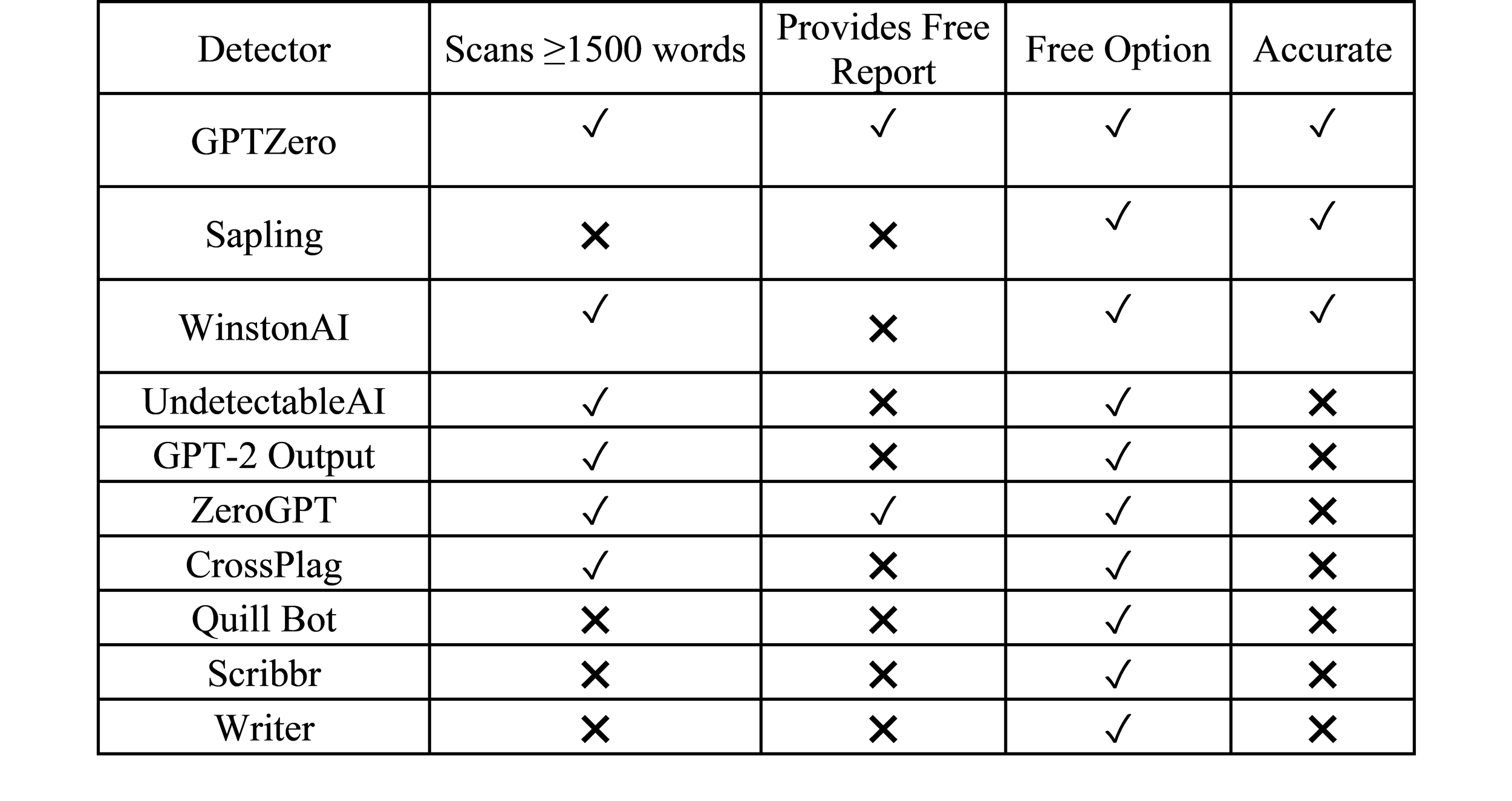

The best AI detector should offer four features enabling teachers to establish when students have submitted an AI-generated essay:

• Accurate detection

• Free report on writing

• Free (limited) scans

• Guest scans* of at least 25% of an assignment (≥1500 words).

*No signup or paid subscription required.

I ran ten scans of essays submitted by students: two written by the students themselves and eight written by students who admitted using AI. I had easily spotted a major AI tell in those eight: they invented content that didn’t exist. My results contrast dramatically with reviews by Forbes because I used essays on highly specific topics, not essays written to generic prompts like “Write an essay on the French Revolution” [1].

The two human essays were sophisticated and flawless. The eight AI essays, however, consisted of hallucinations: invented details presented as fact. These hallucinations existed solely because the book I assigned had virtually no online footprint.

Apparently, both AI and nature abhor a vacuum. Instead of reporting that it had insufficient information about the source, the AI fabricated details every time.

AI essays written to generic prompts typically feature some distinctive tells like unusual consistency in the types of words and lengths of sentences. In addition, AI content is typically a summary of events, seldom focusing on a specific scene or detail.
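One of those tells, unusually uniform sentence lengths, is easy to measure yourself. The sketch below is my own illustration, not the method used by any detector named in this article: it splits text into sentences on periods, question marks, and exclamation points, then reports the average sentence length in words and how much lengths vary (the standard deviation). A low variation relative to the average suggests uniform sentences, but on its own it proves nothing. The function name `sentence_length_stats` is hypothetical.

```python
import re
from statistics import mean, pstdev

def sentence_length_stats(text):
    """Return (mean, population std dev) of sentence lengths in words.

    Sentences are split naively on ., !, or ? followed by whitespace,
    so abbreviations like "Mrs." will be miscounted as sentence ends.
    """
    sentences = [s for s in re.split(r"[.!?]+\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    return mean(lengths), pstdev(lengths)

# Two made-up passages for comparison.
uniform = "The farm was cold. The vet was tired. The cow was sick."
varied = "Cold. The vet had driven for hours through sleet to reach the farm. He sighed."

print(sentence_length_stats(uniform))  # every sentence is 4 words: no variation
print(sentence_length_stats(varied))   # same average length range, much more variation
```

Human writers, especially young ones, tend to mix very short and very long sentences, so their numbers usually vary more. Treat a result like this as a reason to look closer, never as proof.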

Unsurprisingly, most AI detectors pick up this kind of content easily. On the other hand, when students write assignments in response to highly specific questions, and the writing includes details and even quotes, these features apparently foil the primitive models most AI detectors rely on.


For example, nearly all the AI detectors failed to spot the short AI-generated essay submitted by my ordinarily punctuation-averse student, even though the essay fabricated every detail.

The Best AI Detectors

The first test involved an essay that was 100% AI-generated. Only four AI detectors were reasonably accurate at this task:

GPTZero (97%)

Sapling (93.1%)

WinstonAI (92%)

CrossPlag (78%)

The Worst AI Detectors

On a second essay that was also 100% AI-generated, however, only GPTZero identified it as fully AI-generated. Sapling struggled (79.1%), while both CrossPlag (5%) and WinstonAI (1%) bombed.


The other AI detectors gave dismal performances, identifying less than 10% of the essay as AI-generated. Scribbr (9%), Writer (9%), and Quillbot (9%) all offered poor accuracy. Still, they outperformed Undetectable AI, which detected 0% and, in this respect at least, apparently lives up to its name.

A good set of tools can help teachers improve students’ writing in the AI era. But a good set of strategies for dealing with AI works even more effectively. I’ll cover some of those strategies in my next article.

This article is the first of a series on Teaching ELA in the AI Era.

Footnotes

1. You can find the testing methods Scribbr used here; tellingly, Scribbr ranks its own AI detector at the top. Weirdly, Forbes seems to have accepted most of the statistics Scribbr published.